A bit about me

Hi! My name is Jen and I am a Ph.D. student in machine learning at IST Austria, advised by Dr. Dan Alistarh. I was also fortunate to intern with Weiwei Yang and Janardhan Kulkarni at Microsoft Research.

I am primarily interested in the practical and theoretical foundations of applying machine learning and deep learning models in real life, in particular questions of efficiency, fairness, bias, and general model interpretability. I study these questions in the context of edge-device deployment and other resource-constrained environments.

Before starting at IST, I spent some time in industry: I traded equity options on Wall Street, taught math and science to high-school students in San Francisco, helped build a healthcare startup, and built and deployed deep learning models for text recognition at Google LA.

I am a community lead at Cohere for AI, where I host talks on using ML for social good.

Publications

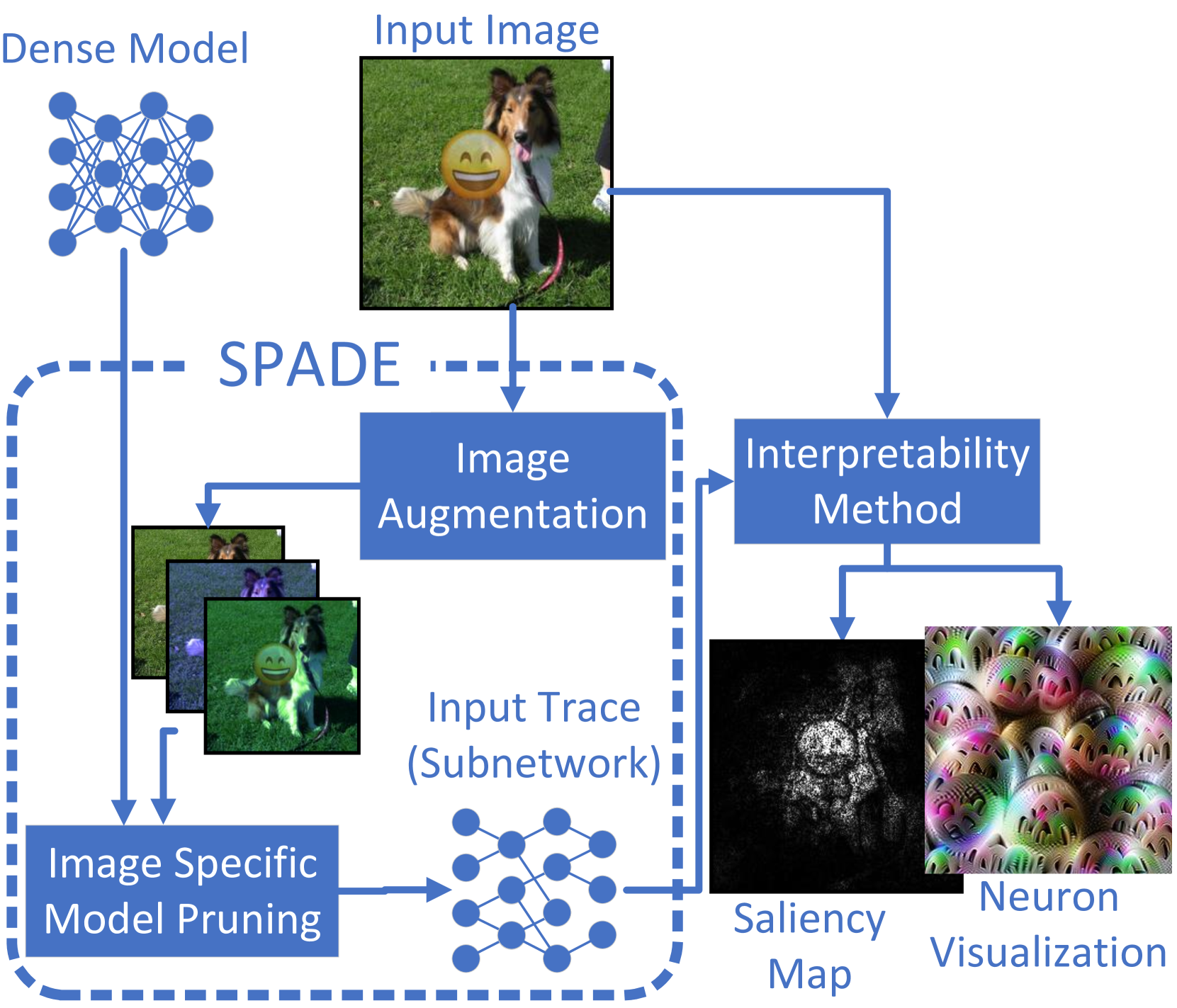

SPADE: Sparsity-Guided Debugging for Deep Neural Networks

ICML 2024, 2024-07-21

We improve DNN interpretability by computing a sparse trace of an input through a model prior to running interpretability methods.

Download here

Panza: A Personalized Text Writing Assistant via Data Playback and Local Fine-Tuning

arXiv, 2024-06-24

We demonstrate the feasibility of training an e-mail composition assistant entirely on a consumer-grade GPU.

Download here

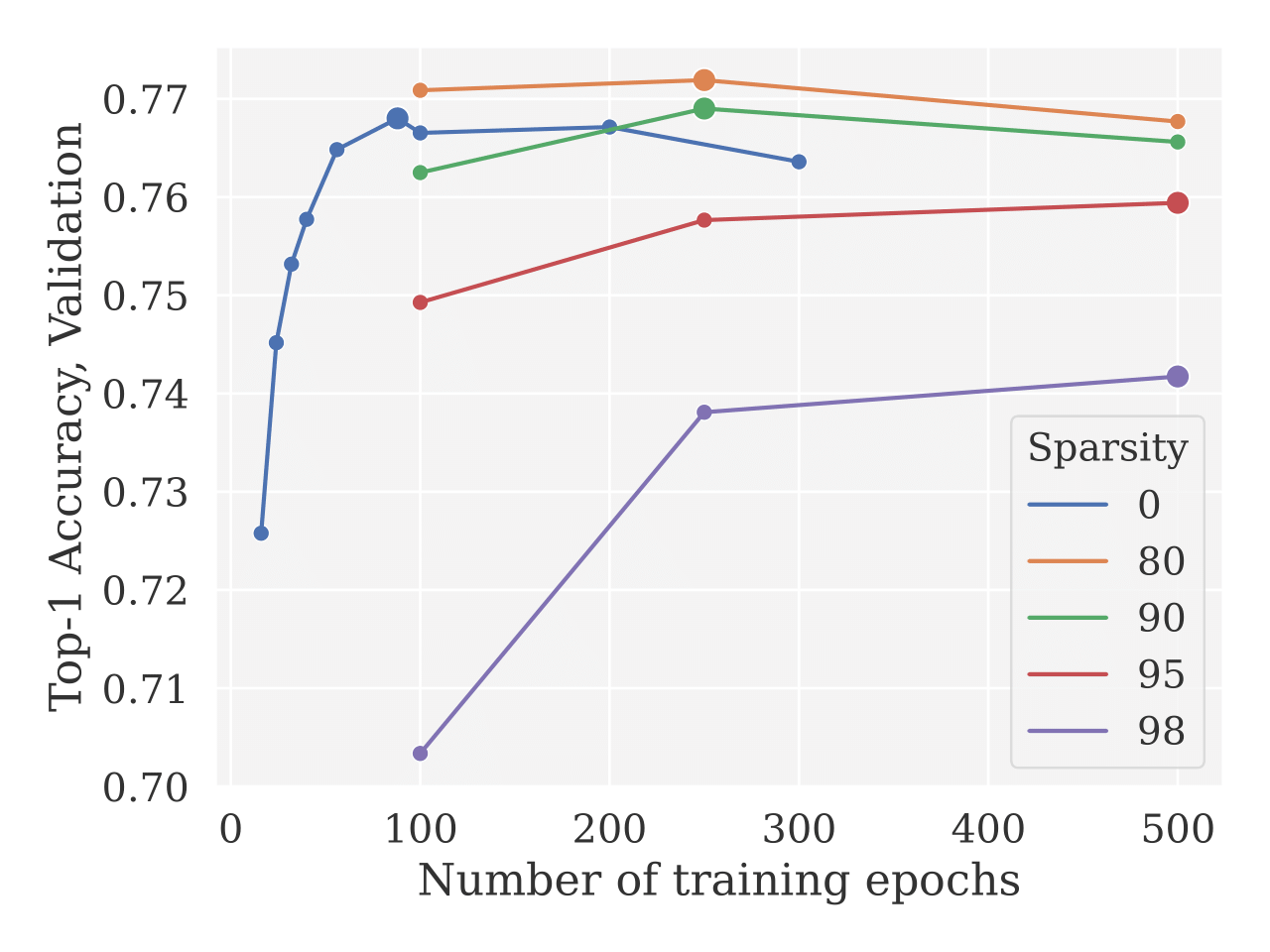

Accurate Neural Network Pruning Requires Rethinking Sparse Optimization

TMLR, 2024-06-20

We show that training settings tuned for dense models are generally suboptimal for sparse training on the same dataset and architecture.

Download here

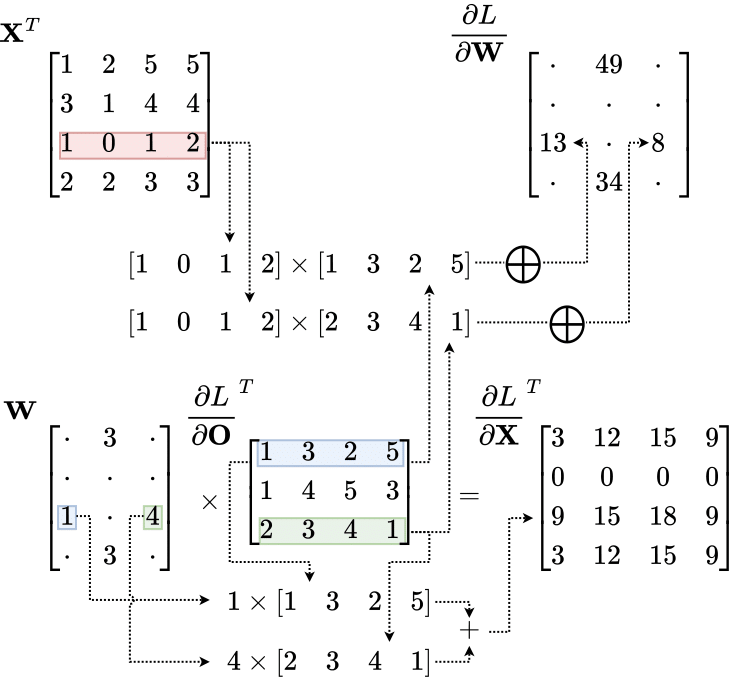

SparseProp: Efficient Sparse Backpropagation for Faster Training of Neural Networks

ICML 2023, 2023-07-23

My groupmates found a way to speed up backpropagation through weights with unstructured sparsity.

Download here

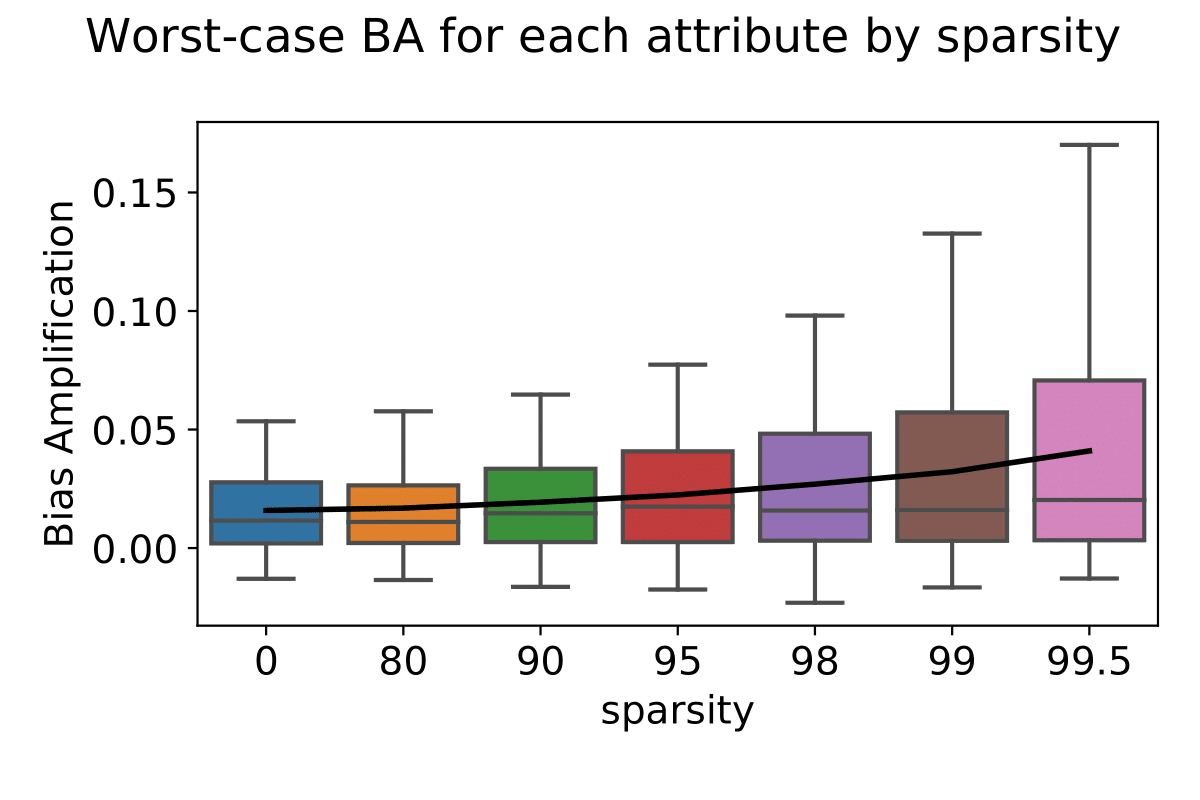

Bias in Pruned Vision Models: In-Depth Analysis and Countermeasures

CVPR 2023, 2023-06-18

We demonstrate that ‘stereotyping’, i.e., the amplification of feature correlations, increases with model sparsity and thus leads to increased bias.

Download here

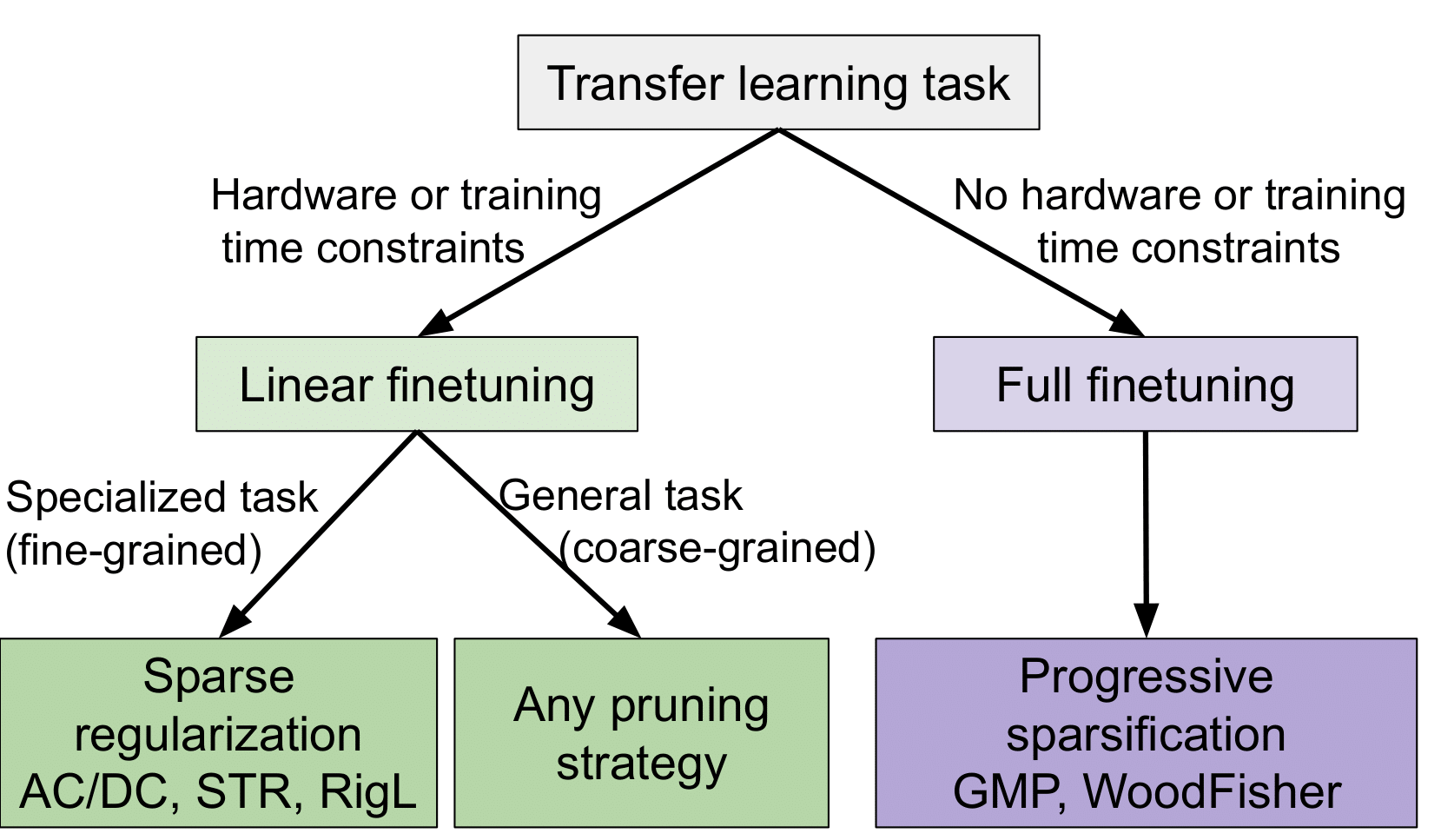

How Well Do Sparse ImageNet Models Transfer?

CVPR 2022, 2022-06-20

We investigate the effect of the pruning method on transfer learning.

Download here

FLEA: Provably Fair Multisource Learning from Unreliable Training Data

Preprint, 2021-06-22

In which we propose a theoretically rigorous algorithm for detecting and eliminating corrupted (perturbed) data in a multisource setting.

Download here

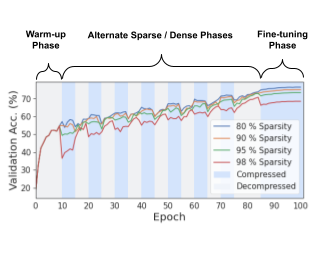

AC/DC: Alternating Compressed/DeCompressed Training of Deep Neural Networks

NeurIPS 2021, 2021-05-21

In which we refine the principle of Iterative Hard Thresholding to propose a simple and effective protocol for unstructured sparse training.

Download here

The VRNetzer platform enables interactive network analysis in Virtual Reality

Nature Communications, 2021-01-19

A 3D interactive platform for exploring biological networks.

Download here

EpiMath Austria SEIR: A COVID-19 Compartment Model for Austria

Preprint, 2021-01-19

Modeling the spread of COVID-19 in Austria.

Download here

CV

Download my CV here.